How to compare AI chips

July 17th, 2020

The demand for AI chips is driven by the use of deep learning

When I started working as an industry analyst 17 years ago, we were taught that it was bad form to title an article as a question, but this one begs to be called “So, how do you compare AI chips?”, and the answer is: with great difficulty.

The field of semiconductor chips is not only a mature one but also an amazing success story: from the day the semiconductor junction was invented in the early 1940s, it has been a steady march to the smartphone in your pocket, a supercomputer by comparison with early machines. Then AI came along and really took off with deep learning (DL), and DL needs a hardware accelerator to run on. Demand for these AI chips is worth over $5b annually and growing.

It should be noted that in the broader field of AI, outside DL, there is less need for an AI hardware accelerator. For example, in the field of intelligent virtual assistants, several of the many vendors we have talked with use novel AI algorithms that are not DL-based and can run on the humble CPU. Actually, the modern CPU is not so humble: CPUs improve year on year, with attention paid to satisfying AI workloads (of the DL variety). When selecting the right hardware, we advocate running comparative tests of the whole application, not just the AI compute element. If the application, such as a web shopping recommender, has a high volume of data traffic between the CPU and its accelerator, there are use cases where it may be faster to keep all the computation on the CPU.
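To make the whole-application point concrete, here is a minimal Python sketch of the arithmetic. It assumes that a request's latency is simply the sum of host-side work, model compute and CPU-accelerator data transfer (real systems may overlap these stages). The `Placement` class, its field names and all the timings are hypothetical, chosen only to illustrate how an accelerator can win on compute yet lose end to end.

```python
from dataclasses import dataclass


@dataclass
class Placement:
    """Hypothetical timing profile for one request (all figures in milliseconds)."""
    name: str
    host_ms: float      # non-AI work on the CPU (parsing, feature lookup, ...)
    compute_ms: float   # AI model execution time
    transfer_ms: float  # CPU <-> accelerator data movement (0 if everything stays on the CPU)

    def end_to_end_ms(self) -> float:
        # Assumption: stages run back to back, so the application sees their sum,
        # not just the compute stage that a chip benchmark would report.
        return self.host_ms + self.compute_ms + self.transfer_ms


def fastest(placements):
    """Pick the placement with the lowest end-to-end latency."""
    return min(placements, key=lambda p: p.end_to_end_ms())


# Illustrative numbers only, not measurements of any real system.
cpu_only = Placement("cpu-only", host_ms=2.0, compute_ms=6.0, transfer_ms=0.0)
offload = Placement("cpu+accelerator", host_ms=2.0, compute_ms=1.0, transfer_ms=7.5)

for p in (cpu_only, offload):
    print(f"{p.name}: {p.end_to_end_ms():.1f} ms")
# cpu-only: 8.0 ms
# cpu+accelerator: 10.5 ms
print("fastest:", fastest([cpu_only, offload]).name)  # fastest: cpu-only
```

With these made-up figures the accelerator runs the model six times faster, but the transfer cost dominates, so keeping everything on the CPU wins. Only measuring the whole application reveals this.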

The types of AI chips

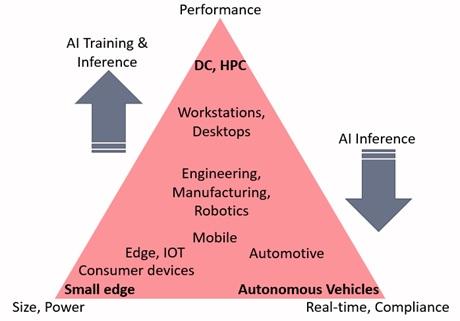

However, DL computation does require an AI chip, and the question is how the chip market breaks into segments. One way of examining this question is to look at where the applications are run. In our recent vendor product comparison for the Kisaco Leadership Chart (KLC) on AI hardware accelerators 2020-21, we worked with 16 vendor participants and found that their products naturally fell into a triangular space (see Figure 1). The vertices of the triangle represent extreme characteristics of the required accelerator. Training mostly takes place at the upper end of the triangle and AI inferencing mostly at the lower end, but note that the characteristics described at these vertices are not mutually exclusive.

- Performance: In this corner of the triangle, what matters most is sheer compute power. This is where AI training mostly takes place, in environments such as data centers (DCs) and high-performance computing (HPC) machines. AI inference is also important in the DC.

- Size and power: At this vertex, the constraints of small size and low power consumption are paramount. These variables also relate to the cost of the AI chip and to a low cost for the overall product unit. We have named the exemplar application environment the small edge.

- Real-time, compliance: Here the need for real-time response, i.e. ultra-low latency, is a safety-critical concern, and this area of AI activity is highly regulated. Autonomous vehicles represent the exemplar application.

Figure 1: Market segment applications for AI hardware accelerators

Source: Kisaco Research

Given the market makeup shown in the figure, the ideal characteristics for each type of chip become evident: for example, at the small edge you need the tiniest chip, with the lowest power consumption and the highest possible performance within those constraints.
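The small-edge rule of thumb can be read as a constrained selection: discard every chip outside the size and power budget, then pick the highest-performing one that remains. Below is a minimal Python sketch under that assumption. The `Chip` fields, the budgets and every spec number are hypothetical, and peak throughput from a spec sheet is only a proxy for how a real application will perform.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Chip:
    """Hypothetical spec-sheet entry; all figures are illustrative."""
    name: str
    tops: float      # peak throughput, tera-operations per second
    watts: float     # typical power draw
    area_mm2: float  # die or package area


def best_for_small_edge(chips: List[Chip], max_watts: float,
                        max_area_mm2: float) -> Optional[Chip]:
    # Keep only the chips that fit the power and size envelope...
    fits = [c for c in chips if c.watts <= max_watts and c.area_mm2 <= max_area_mm2]
    # ...then take the highest peak performance among them (None if nothing fits).
    return max(fits, key=lambda c: c.tops, default=None)


# Made-up candidates spanning the triangle: a DC-class part and two edge parts.
candidates = [
    Chip("chip-a", tops=100.0, watts=75.0, area_mm2=800.0),
    Chip("chip-b", tops=4.0, watts=2.0, area_mm2=25.0),
    Chip("chip-c", tops=1.5, watts=0.5, area_mm2=9.0),
]

choice = best_for_small_edge(candidates, max_watts=1.0, max_area_mm2=10.0)
print(choice.name if choice else "no chip fits")  # chip-c
```

Loosening the budget (say to 5 W and 50 mm²) would select `chip-b` instead, which shows why the "best" chip depends on which corner of the triangle you are in.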

There is no one AI chip to rule them all

Each of the market segments described in Figure 1 has its own set of ideal characteristics, and the players in the field have to decide which part of the market they want to compete in. The reports listed in Further Reading go into more detail. I will end with this thought: is it all about specifications, or do other dimensions of an AI chip player’s business matter as much, or at least somewhat? The spec table certainly matters a lot, but actual applications have peculiarities that only tests and proofs of concept, with the applications running on actual chips, will flush out. There is also a host of software and hardware optimizations applied to DL models that reduce the computation required; AI chip makers use such techniques to great advantage, which makes the theoretical spec table only a starting point. Other dimensions of the business, such as development of the software stack, opportunities to expand the use of AI chips into new markets, and support for customers to innovate (not all business users of AI chips have an AI background), also matter to how successful an AI chip maker will be.

Notes

- Kisaco Research is the company behind the AI Hardware Summit conference series.

- We have just published the first AI chip analyst chart: Kisaco Leadership Chart on AI Hardware Accelerators 2020-21. See here for more information: https://www.kisacoresearch.com/kr-analysis

Further reading

MLPerf, MLCommons, and improving benchmarking, Meta Analysis: the KR Analysis blog, KR320, July 2020.

What we are trying to achieve with AI analysis at Kisaco Research, Meta Analysis: the KR Analysis blog, KR321, July 2020.

Kisaco Leadership Chart on AI Hardware Accelerators 2020-21 (part 1): Technology and Market Landscapes, KR301, July 2020.

Kisaco Leadership Chart on AI Hardware Accelerators 2020-21 (part 2): Data Centers and HPC, KR302, July 2020.

Kisaco Leadership Chart on AI Hardware Accelerators 2020-21 (part 3): Edge and Automotive, KR303, July 2020.

Kisaco Leadership Chart on ML Lifecycle Management Platforms 2020-21, July 2020.